Jason Derenick, Ph.D., CTO @ Exyn, explains how our software package can perform fully autonomous flight with the push of a button

By Jason Derenick, Ph.D., CTO of Exyn Technologies

The Exyn A3R™ (Advanced Autonomous Aerial Robot) system is our full-stack solution for real-world, commercial drone applications. It is fundamentally composed of three separate ingredients:

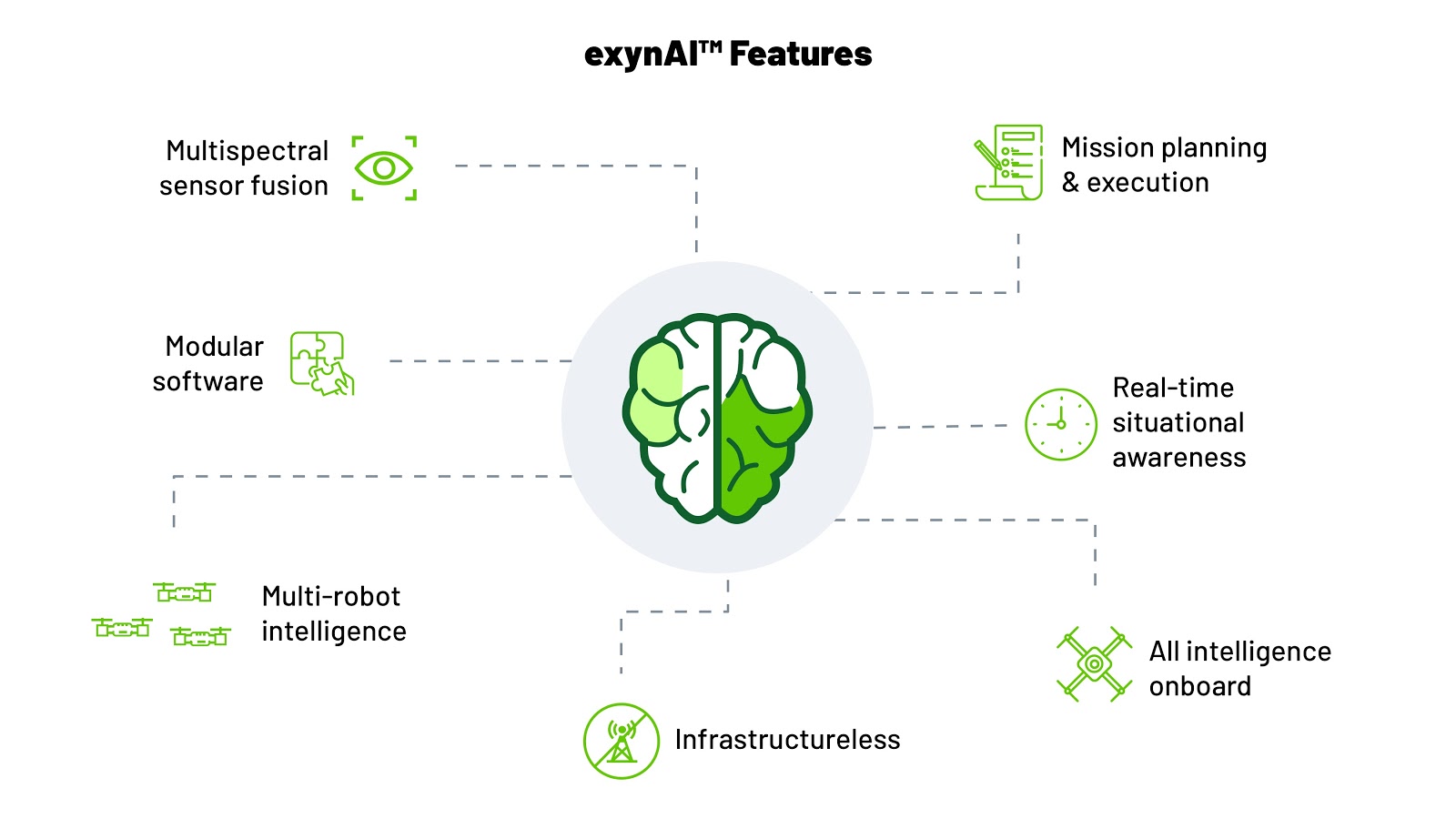

Since drone hardware is already commercially available and because the Exyn FlightGuide will be addressed in a future blog post, I will focus this article on the third ingredient, ExynAI software, and what makes it so unique.

The beauty of ExynAI is that it has been architected from the ground up on first principles to enable push-button, fully autonomous flight without the need for infrastructure to ensure safe and successful operation. In this context, when robots (more precisely, autonomous vehicles) depend on infrastructure, it means they require one or more of the following: external signals such as GPS or ultra-wideband to enable navigation, persistent communications with an off-board “ground control station” for tethered operation, or a priori information such as pre-existing maps of the target operating environment.

For robots controlled by ExynAI, external signals such as GPS are just “signals of opportunity” in that they can be leveraged but are not necessary. Similarly, wireless communications can be exploited where available, but they aren't needed to safely execute a mission. Finally, pre-existing maps are welcomed but are viewed as just that: a nice-to-have. ExynAI’s “infrastructure-free” nature, coupled with its platform-agnostic design and decentralized middleware for scaling to multi-robot operations, makes it uniquely suited to address a number of challenging use cases!

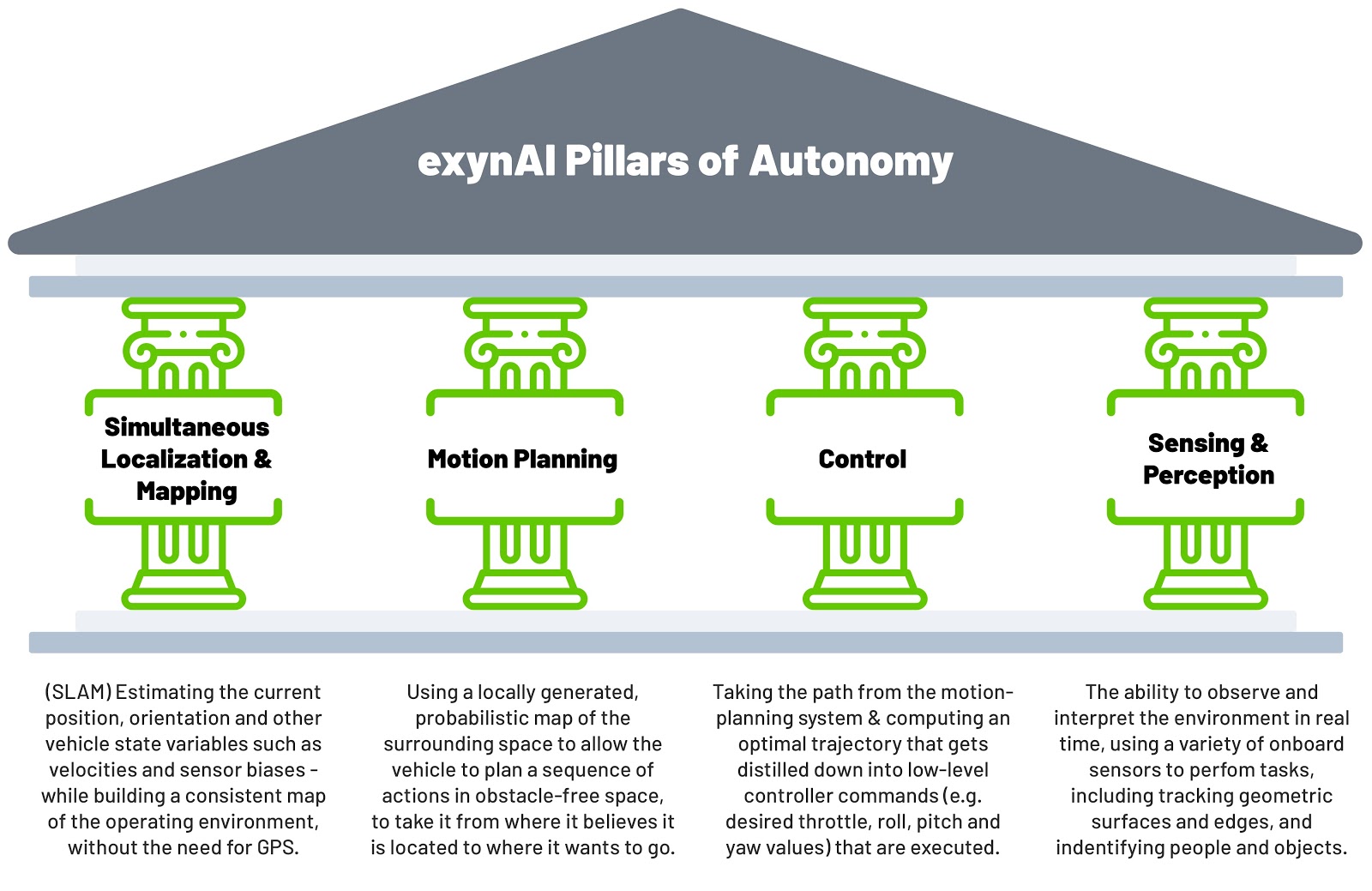

ExynAI software implements what we call the four pillars of autonomy. However, before stating them more concretely, let’s take a step back and consider a simple toy scenario. Imagine you are a robot that has suddenly awoken (in real life, you have just been powered on by your operator) and you’ve successfully initialized all of your sensors and passed your health checks. You immediately start seeing the world as nothing more than a collection of sparse “points” given by your eyes (in this case, your 3D LIDAR system). You also have an inertial measurement unit to help you roughly sense your motions and any changes in direction. Unfortunately, there isn’t a way for anyone to tell you where you are, and now you have just been asked to visit and map a spot far away (much farther than you can see!). What are you supposed to do?

Being a robot is hard. After some careful CPU cycles, you realize that in order to actually go anywhere you first need to know where you are located. Additionally, you realize that even if you do know where you are, you still need to figure out a reliable way to get to where you want to be. That’s all good, but how do you avoid obstacles along the way? Suddenly, you realize that you need to know what it is you are actually seeing! In other words (loosely speaking), you, like every other robot, need to answer the following four fundamental questions:

You sit perplexed wondering how to answer any of these questions. Fortunately, you realize that you are in fact a robot powered by ExynAI and you now have all the answers!

Illustrative example aside, from a technical perspective, answering these questions is quite challenging under the assumptions that we have made for our conditions of operation. The complexity of addressing them is further highlighted by the realization that although the questions are separate and distinct, their answers are not necessarily so. For instance, for any robot to understand where it is in the world, it may first need to know what it is actually seeing, much as a tourist in Manhattan could use a skyscraper as a landmark to help them navigate.

The answers to these questions reflect what we call the four pillars of autonomy, as visualized in the graphic below. They provide the foundation upon which ExynAI’s software framework is constructed. Most notably, the ExynAI task and mission planning system (as described in the next section) relies squarely on each of these building blocks. Without any one of them, Exyn’s signature mission planning and task execution would not be possible! In other words, these pillars are what enable push-button, truly autonomous operation.

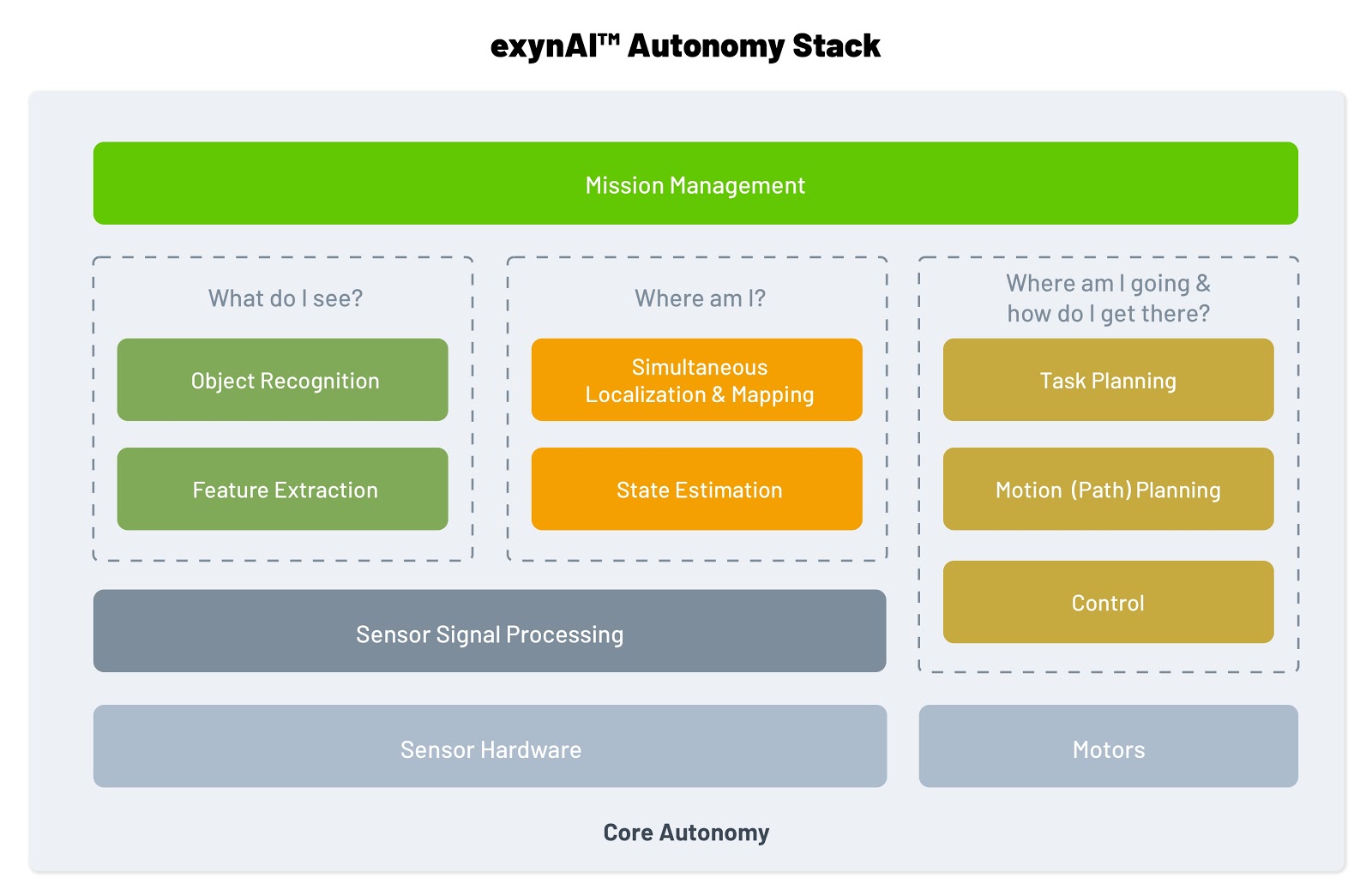

The core pillars of autonomy can be arranged into what we as engineers call “stacks”. Each layer in a stack is composed of elements occupying the layer or layers beneath it and in doing so it provides an additional level of abstraction within the system. An oversimplified version of the ExynAI autonomous system stack would look something like this.

Although the system is layered in this manner, information propagates both laterally and vertically across the various components. For example, the motion (path) planning leverages the current state reported by the state estimation in order to seed its computation of a minimal cost path to the desired goal. Similarly, if the goal given to the motion planner is unreachable (e.g., in a wall), it will report it to the task planner which will either determine the next task to execute or report a mission-critical failure to the highest level module.
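The two interactions described above can be sketched in a few lines of Python. This is only a toy illustration, not ExynAI code: the grid, the breadth-first planner, and the names `plan_path` and `run_tasks` are all assumptions made for the example. The planner is seeded with the current state estimate, and when a goal sits inside a wall it reports failure upward so the task planner can move on to the next task.

```python
from collections import deque

# Hypothetical 2D occupancy grid: 1 = wall, 0 = free space.
GRID = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
]

def plan_path(grid, start, goal):
    """Breadth-first search; returns a fewest-steps path or None if unreachable."""
    rows, cols = len(grid), len(grid[0])
    if grid[goal[0]][goal[1]] == 1:  # goal lies inside a wall
        return None
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk parent links back to the start
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            in_bounds = 0 <= nr < rows and 0 <= nc < cols
            if in_bounds and grid[nr][nc] == 0 and nxt not in parents:
                parents[nxt] = cell
                queue.append(nxt)
    return None

def run_tasks(grid, estimated_state, goals):
    """Task-planner stand-in: try each goal in order, skipping unreachable ones."""
    report = []
    for goal in goals:
        # The planner is seeded with the latest state estimate.
        path = plan_path(grid, estimated_state, goal)
        if path is None:
            report.append((goal, "unreachable"))  # reported up to the task planner
            continue
        estimated_state = path[-1]  # pretend the vehicle flew the path
        report.append((goal, "reached"))
    return report

report = run_tasks(GRID, (0, 0), [(2, 2), (1, 1), (3, 0)])
```

Here the second goal, `(1, 1)`, lies in a wall, so it is marked unreachable and the remaining goal is still attempted.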

A key advantage of using Exyn’s robots is their ability to conduct “missions” autonomously, with zero to minimal input from the end-user. The best way to think about a mission is as a sequence of “tasks” that the robot is being asked to execute in a best-effort manner. Tasks are nothing more than primitive behaviors like “Take-off”, “Land”, “Fly to that location” or “Explore around here”.

Although an end-user can ask the robot to carry out a mission, the robot ultimately has the final say in what it is willing to do. This may seem somewhat surprising, but it is the robot’s ability to continuously estimate risk and determine safety that makes it so unique. In the context of our robots, “safety” is measured by considering a number of factors that include everything from proximity to nearby obstacles to ensuring enough battery for a return home. For instance, if as part of a mission you inadvertently ask the robot to visit a location that is physically impossible for it to reach, it will make that determination and skip that part of the mission before moving on to the next task in its queue or returning home. It is for this reason that every task is viewed as a request more than a command, and it is also why we describe the robot’s operation as best-effort. It’ll do its best to complete a task subject to real-world safety constraints.
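To make the "request, not command" idea concrete, here is a minimal Python sketch of a best-effort executor that checks one safety factor, battery for the return home, before accepting each task. The constants, the straight-line energy model, and the function names are hypothetical and chosen only for illustration; a real system would weigh many more factors, such as obstacle proximity.

```python
import math

HOME = (0.0, 0.0)
RANGE_PER_PERCENT_M = 10.0  # assumed: metres of flight per 1% of battery
RESERVE_PERCENT = 20.0      # assumed: margin that must remain on landing

def dist(a, b):
    return math.hypot(a[0] - b[0], a[1] - b[1])

def can_accept(position, battery_percent, target):
    """Accept a task only if the robot can reach it and still fly home with reserve."""
    needed_m = dist(position, target) + dist(target, HOME)
    usable_m = (battery_percent - RESERVE_PERCENT) * RANGE_PER_PERCENT_M
    return needed_m <= usable_m

def execute_mission(targets, battery_percent=100.0):
    """Treat every target as a request: do it if safe, otherwise skip it."""
    position, log = HOME, []
    for target in targets:
        if not can_accept(position, battery_percent, target):
            log.append((target, "skipped"))
            continue
        battery_percent -= dist(position, target) / RANGE_PER_PERCENT_M
        position = target
        log.append((target, "done"))
    battery_percent -= dist(position, HOME) / RANGE_PER_PERCENT_M  # return home
    return log, battery_percent

log, final_battery = execute_mission([(300.0, 0.0), (300.0, 400.0), (0.0, 100.0)])
```

In this run the second target is skipped because visiting it would not leave enough charge to get home, while the third is still completed.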

Missions are provided to the robot as “mission specifications”, which are generated from the graphical inputs given by the end-user via a connected tablet interface. Generally, the system supports two types of missions. The first is what we call Smart Point-to-Point, where the vehicle is asked to visit a set of sparse locations or “objectives” while avoiding obstacles and hazards along the way. The mission, in this case, is entered simply by tapping the desired locations on the screen. The second mode is what we call Scoutonomy, which is full-blown 3D exploration. In this case, the end-user draws a volumetric bounding box around the space that he or she would like the robot to map. The robot then sets off exploring the space by choosing locations that maximize information gain.
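As a rough illustration of exploration driven by information gain, the Python sketch below scores each known-free cell inside a bounding box by how many unknown cells a sensor placed there could observe, then picks the best one. It is a simplified 2D stand-in under stated assumptions: the grid encoding, the square sensor footprint, and the names `information_gain` and `next_viewpoint` are invented for the example and are not taken from ExynAI.

```python
UNKNOWN, FREE, OCCUPIED = -1, 0, 1

def information_gain(grid, cell, sensor_range):
    """Count unknown cells inside a square window centred on `cell`."""
    r0, c0 = cell
    gain = 0
    for r in range(max(0, r0 - sensor_range), min(len(grid), r0 + sensor_range + 1)):
        for c in range(max(0, c0 - sensor_range), min(len(grid[0]), c0 + sensor_range + 1)):
            if grid[r][c] == UNKNOWN:
                gain += 1
    return gain

def next_viewpoint(grid, bounds, sensor_range=1):
    """Return the free cell inside `bounds` with the highest gain, or (None, 0)."""
    (r_min, c_min), (r_max, c_max) = bounds
    best, best_gain = None, 0
    for r in range(r_min, r_max + 1):
        for c in range(c_min, c_max + 1):
            if grid[r][c] != FREE:
                continue  # only fly to cells already known to be free
            gain = information_gain(grid, (r, c), sensor_range)
            if gain > best_gain:
                best, best_gain = (r, c), gain
    return best, best_gain

# Left half has been mapped; right half is still unknown.
grid = [
    [FREE, FREE,     UNKNOWN, UNKNOWN],
    [FREE, FREE,     UNKNOWN, UNKNOWN],
    [FREE, OCCUPIED, UNKNOWN, UNKNOWN],
]
viewpoint, gain = next_viewpoint(grid, bounds=((0, 0), (2, 3)))
```

When no free cell can reveal anything new, `next_viewpoint` returns `(None, 0)`, which is a natural signal that the bounded volume has been explored.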

The key, though, is that once the end-user provides the mission specs and instructs the robot to begin the mission, the ExynAI software takes over. From take-off to landing, the system is fully autonomous. No pilot needed!

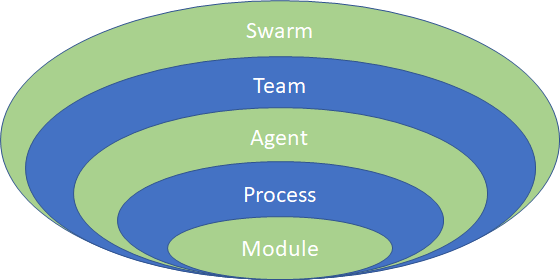

All of the capabilities afforded by ExynAI live within a proprietary software framework built upon industry-standard libraries and best practices. This framework is modeled around a natural hierarchy of system elements that dictate how software components are defined, composed, and executed. The hierarchy is illustrated in the following figure.

In ExynAI, the basic software unit is a Module that implements some particular algorithm (e.g., a particular path planning algorithm). Modules are combined into Processes that are inherently responsible for their timely execution. A collection of Processes can be combined to define an Agent, and a collection of Agents can work together as a Team. Generally speaking, you can interpret an Agent as being all of the software processes that execute on a particular robot.
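The Module, Process, Agent, and Team hierarchy maps naturally onto nested containers. The Python sketch below is only one way to picture it: the class fields and the `spin_once` method are hypothetical names for this example, and the actual framework is proprietary and not shown here.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class Module:
    name: str
    step: Callable[[], str]  # the algorithm this module runs once per cycle

@dataclass
class Process:
    name: str
    modules: List[Module] = field(default_factory=list)

    def spin_once(self) -> List[str]:
        # A Process is responsible for executing its Modules in order.
        return [module.step() for module in self.modules]

@dataclass
class Agent:
    name: str
    processes: List[Process] = field(default_factory=list)

    def spin_once(self) -> Dict[str, List[str]]:
        # An Agent is all of the Processes running on one robot.
        return {process.name: process.spin_once() for process in self.processes}

@dataclass
class Team:
    agents: List[Agent] = field(default_factory=list)

    def spin_once(self) -> Dict[str, Dict[str, List[str]]]:
        return {agent.name: agent.spin_once() for agent in self.agents}

estimator = Module("state_estimator", lambda: "estimated")
planner = Module("path_planner", lambda: "planned")
agent = Agent("robot_1", [Process("navigation", [estimator, planner])])
team = Team([agent])
result = team.spin_once()
```

Each level only knows about the level directly beneath it, which is what lets the same Module be reused in different Processes or on different robots.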

All Modules implement a well-defined message-passing API that allows them to communicate and interact with other Modules in the system. In general, communication comes in two flavors: low-latency shared memory messaging (useful when Modules need to share large chunks of data like dense maps!) and standard IP-based network communications (both reliable and unreliable). The communications middleware is fully decentralized and does not require a broker to manage the flow of messages. Both publish/subscribe and request/reply messaging models are supported.
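The two messaging models can be pictured with a small in-process Python sketch. Everything here is an assumption for illustration: the `Node` class and its method names are invented, and plain function calls stand in for the shared-memory and network transports. The point is only that peers hold references to each other directly, so no central broker sits between them.

```python
class Node:
    """A peer that talks to other peers directly, with no broker in between."""

    def __init__(self, name):
        self.name = name
        self._subscribers = {}  # topic -> callbacks registered with this node
        self._services = {}     # service name -> handler function

    def subscribe_to(self, publisher, topic, callback):
        # The subscriber registers itself with the publishing peer.
        publisher._subscribers.setdefault(topic, []).append(callback)

    def publish(self, topic, message):
        # Publish/subscribe: fan the message out to every registered callback.
        for callback in self._subscribers.get(topic, []):
            callback(message)

    def serve(self, service, handler):
        self._services[service] = handler

    def request(self, peer, service, payload):
        # Request/reply: call the peer's handler and return its answer.
        return peer._services[service](payload)

estimator, planner, mapper = Node("estimator"), Node("planner"), Node("mapper")

received = []
planner.subscribe_to(estimator, "pose", received.append)
estimator.publish("pose", (1.0, 2.0, 3.0))

mapper.serve("is_occupied", lambda cell: cell in {(2, 0, 1)})
answer = planner.request(mapper, "is_occupied", (2, 0, 1))
```

Publish/subscribe suits streaming data such as pose updates, while request/reply suits one-off queries where the caller needs an answer before continuing.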

All flight-critical software (software that executes on the robot) is written using C/C++. The choice is driven by the real-time nature of the Processes. Unlike languages whose abstractions add overhead at execution time, C/C++ allows the code to be “closer to the hardware” and ultimately faster!

From the perspective of the end-user, ExynAI provides a number of unique capabilities that set it apart from other systems on the market.

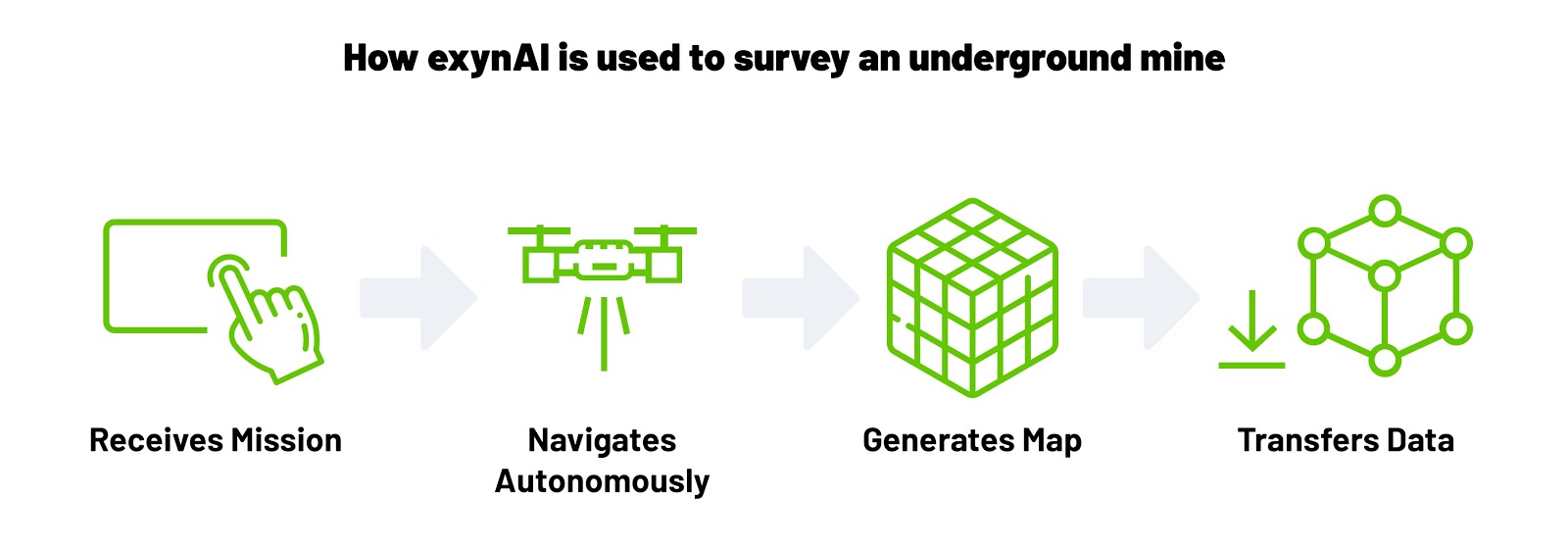

To give you a better understanding of how all of this comes together in the real world, here's an example of how one of our robots would be used in a mining environment. Let’s say that during the mission planning phase, the operator decides they’d like to determine the viability of an old mine by surveying existing stopes (large underground cavities created by previous excavation operations). Let’s also assume that the operator already knows the approximate location and number of these stopes. Using the Exyn tablet, they simply tap on the stopes’ locations, then tap another button on the screen to start the mission.

The drone takes off and, using a variety of onboard sensors, ExynAI starts generating a voxelized, localized Minecraft-like map of the environment as it flies. Taking into account real-world obstacles such as walls and mining equipment, each voxel is given an “occupied” or “unoccupied” state. ExynAI then issues commands to the drone to navigate the environment while avoiding areas where there are occupied voxels.

After the drone approaches and maps out the first stope, it continues along a path to the remaining stopes. During this entire time, the software employs what we call “smoothing” to optimize the drone’s path. This reduces the number of throttle and directional changes needed, ultimately extending battery life over the mission.
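The article does not say which smoothing method ExynAI uses, so the Python sketch below substitutes one common and simple technique, line-of-sight shortcutting, purely to show how smoothing cuts down on direction changes. The sampling-based collision check, the 2D grid, and the function names are all assumptions for the example.

```python
def line_is_free(a, b, occupied, samples=20):
    """Sample points along the segment a-b and reject it if any lands in an occupied cell."""
    for i in range(samples + 1):
        t = i / samples
        x = a[0] + t * (b[0] - a[0])
        y = a[1] + t * (b[1] - a[1])
        if (round(x), round(y)) in occupied:
            return False
    return True

def shortcut(path, occupied):
    """Greedily jump from each waypoint to the farthest one with a clear line of sight."""
    smoothed = [path[0]]
    i = 0
    while i < len(path) - 1:
        j = len(path) - 1
        # Walk back from the end until a collision-free segment is found.
        while j > i + 1 and not line_is_free(path[i], path[j], occupied):
            j -= 1
        smoothed.append(path[j])
        i = j
    return smoothed

# An L-shaped path around a small obstacle cluster (cells are hypothetical).
occupied = {(1, 1), (1, 2), (2, 2)}
path = [(0, 0), (1, 0), (2, 0), (3, 0), (3, 1), (3, 2), (3, 3)]
smoothed = shortcut(path, occupied)
```

The smoothed path keeps the same start and end points but has fewer waypoints, which means fewer turns for the vehicle to execute.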

In parallel -- while the drone is generating data-rich voxelized (dense) maps -- it’s also using onboard LIDAR to create high-resolution online maps that enable the mine operator to visually inspect the stopes. All of this localized and online mapping is performed in real-time, and periodically the ExynAI software will automatically compare the local and online maps to further optimize navigation during the mission.

ExynAI is the culmination of several years of hard work across our entire team, with roots in Penn’s GRASP engineering lab. With its modular approach to autonomy, we’ve designed it to be adapted to virtually any enterprise-grade robot for a variety of industrial applications across the mining, logistics, and construction sectors.
