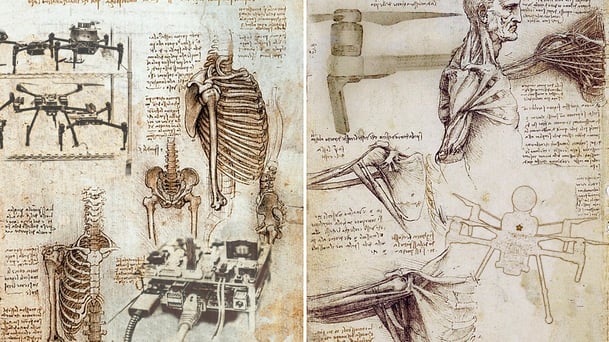

You'll never look at an aerial robot the same way after this robotic dissection

It's easy to think of an Exyn robot as one single entity when we see it flying around the office or in an underground cavity. But if we were to look under the hood, we would see a kaleidoscope of wires, sensors, motors, and countless other gizmos that all come together in an elaborate dance of aerial autonomy.

So as a thought experiment, we wanted to "dissect" one of our robots and see how each of its components compares to the human body. Despite their carbon fiber arms and legs, our robots emulate what we humans do: perceiving their environment, working out how to traverse it quickly and efficiently, and building a mental map for future reference.

In other words, we designed our autonomy to act like a human brain so you can keep yours out of the loop. In this piece, we take a closer look at the anatomy of the Exyn “brain.”

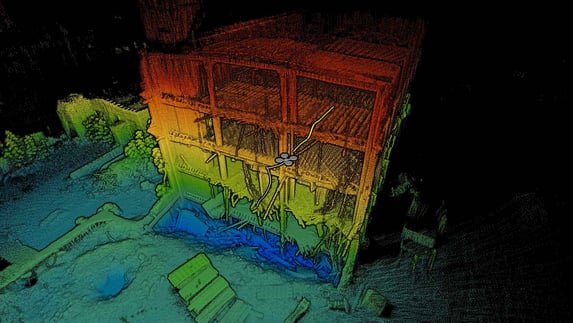

One of the robot's most important sensors is its "eyes": the Velodyne LiDAR Puck LITE. This gimballed sensor fires invisible laser beams, generating up to 300,000 points per second that help the robot understand where it is in three-dimensional space. Unlike our eyes, though, it can see in the dark, because it emits its own light outside the visible spectrum instead of relying on ambient light.

The Puck LITE's lasers work even in the darkest underground mine. Firing them hundreds of thousands of times per second all around the robot (don't worry, they're safe) lets it understand and navigate its environment. Without this highly detailed set of eyes, our robots would be flying blind. It's also why we're a proud member of the Automated with Velodyne Ecosystem.

The "organ" we take most for granted until it's too late? The batteries, the "heart" of our robot. Their lithium cells pump electrons through silicon arteries and capillaries to power every action the robot takes. Luckily, these organs are easily swappable and don't require any surgery on the robot.

Another crucial “sensing” component for autonomous flight is the inertial measurement unit (IMU). You can think of it as the robot's inner ear: it helps the robot stay balanced, while also reporting its orientation and angular speed to the artificial intelligence and machine learning pipeline for further processing.

Internally, we call the robot's brain the Flight Guide, powered by ExynAI, our proprietary artificial intelligence/machine learning pipeline. This is where all the sensor data comes together, enabling the robot to make decisions about its current mission in relation to the world it perceives through its sensors.

Once the brain makes a decision, it sends signals to the low-level flight controller, the electronic speed controllers (ESCs), and the motors, which react accordingly. Think of these as the robot's nervous system and muscles. The flight controller, like our nervous system, interprets signals from the Flight Guide and relays them to each component that needs them. The ESCs, like muscles, then translate those broad commands into individual actions for each motor.

The legs are, well … just the legs. They're crucial for letting the robot land safely, but they're not tied into the "brains" of the operation.

Sound complicated? It is, just like the intricacies of the human body. Take the seemingly simple act of picking up a glass of water. What looks like basic hydration is actually a complex web of electrical signals, synapses, coordination, and muscle fibers. Your brain needs the spatial awareness to locate the glass in 3D space, then it must send signals that your muscles translate into steady movements so you don't shatter the glass on the floor or spill water all over yourself.

While we're only showing you our DJI platform here, we designed ExynAI to be platform-agnostic so we can add aerial autonomy to any number of drone systems. We're like a friendlier version of Young Frankenstein, swapping brains into different drone bodies. Luckily, the drones we create are easily controlled from a tablet, and the torch-carrying mob is just trying to get a better look at your high-fidelity data sets.

Ready to see the world through the eyes of a robot? Sign up for a virtual demo today.